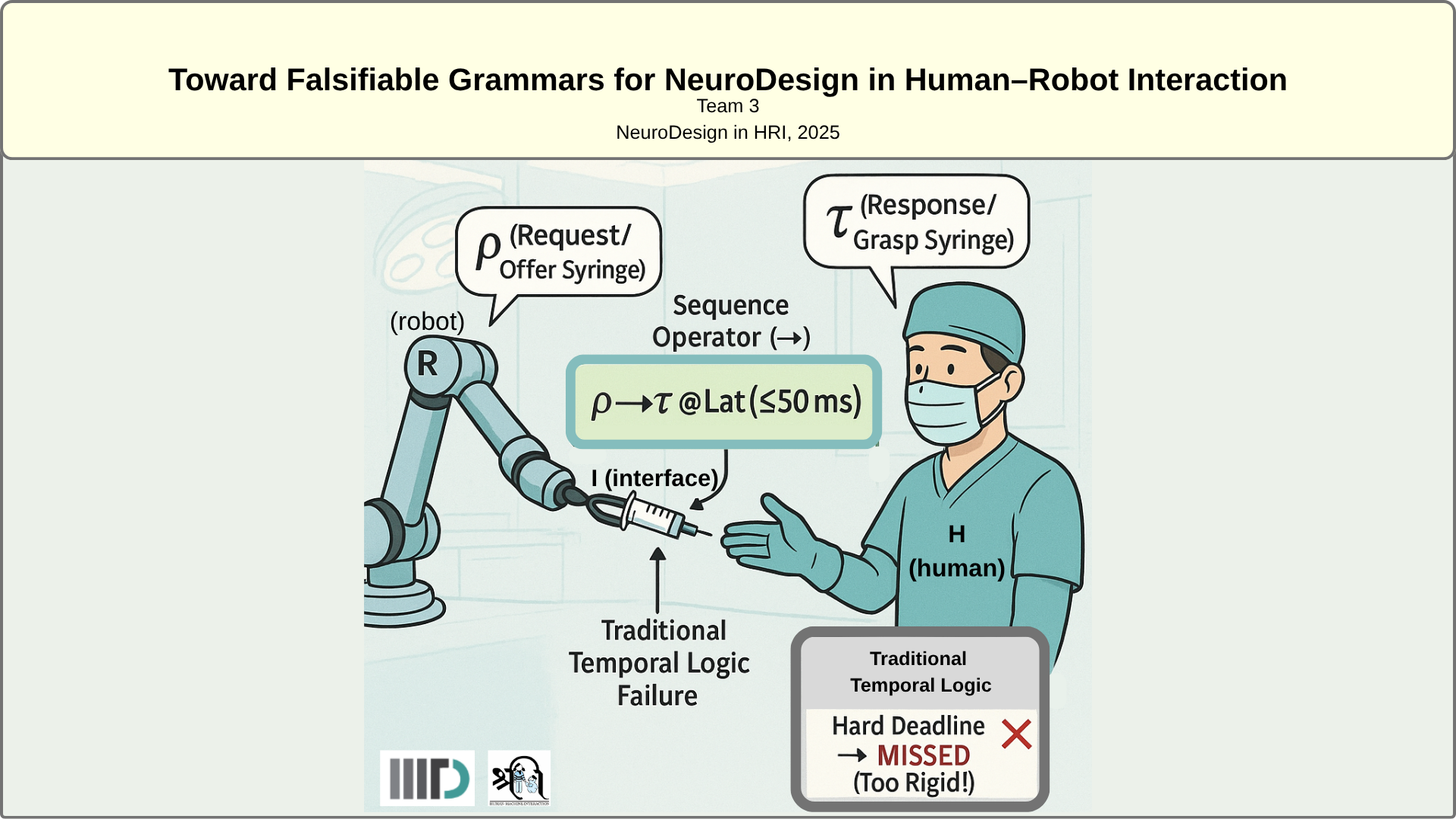

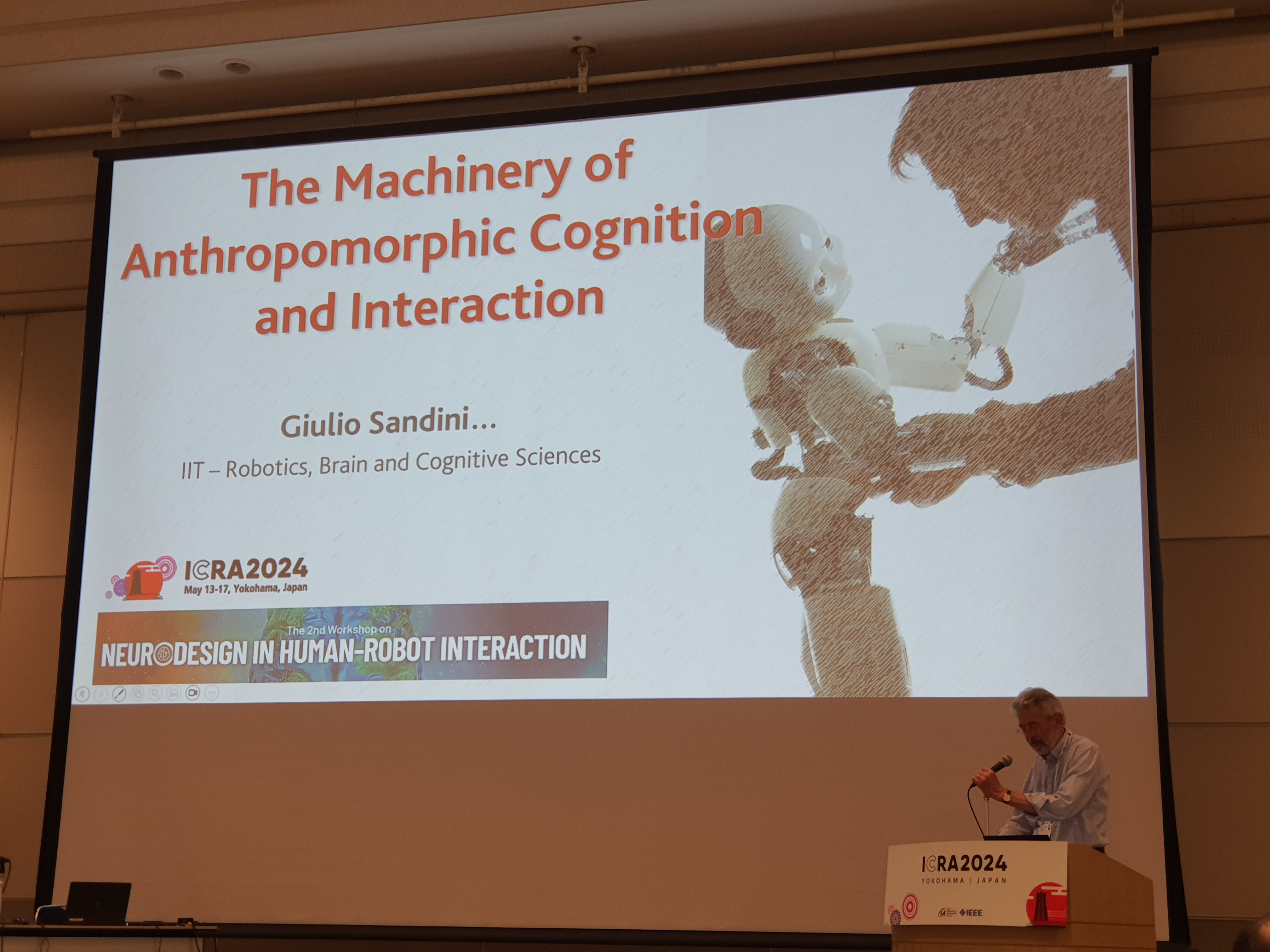

🧠 What Is NeuroDesign in HRI?

NeuroDesign in Human-Robot Interaction (HRI) is an emerging interdisciplinary approach that brings together principles from neuroscience, cognitive and behavioral psychology, robotics, AI, and human-centered interaction design. Its goal is to create human-robot systems that go beyond performance metrics and deliver experiences that are deeply intuitive, ergonomic, emotionally resonant, and cognitively aligned with the human brain.

It emphasizes designing at every level of the system: from the physical form factor of the robot to its internal software, AI, and control logic, and from multi-modal sensing strategies (e.g., EEG, EMG, IMU, voice, and vision) to interaction flows and feedback mechanisms. Every element is co-designed to create seamless, brain-centered experiences. Whether it’s a robot adjusting its behavior based on your mental fatigue, a soft exosuit synchronizing with your intention and muscle activity, or a socially assistive robot responding to your emotional state through haptics and voice, NeuroDesign focuses on creating interactions that feel natural to the brain and body: smooth, engaging, and human-centered.

At its core, NeuroDesign covers both cognitive human-robot interaction (cHRI) and physical human-robot interaction (pHRI). It supports four fundamental modes of brain-body-robot interaction, each representing a bidirectional loop between human and machine:

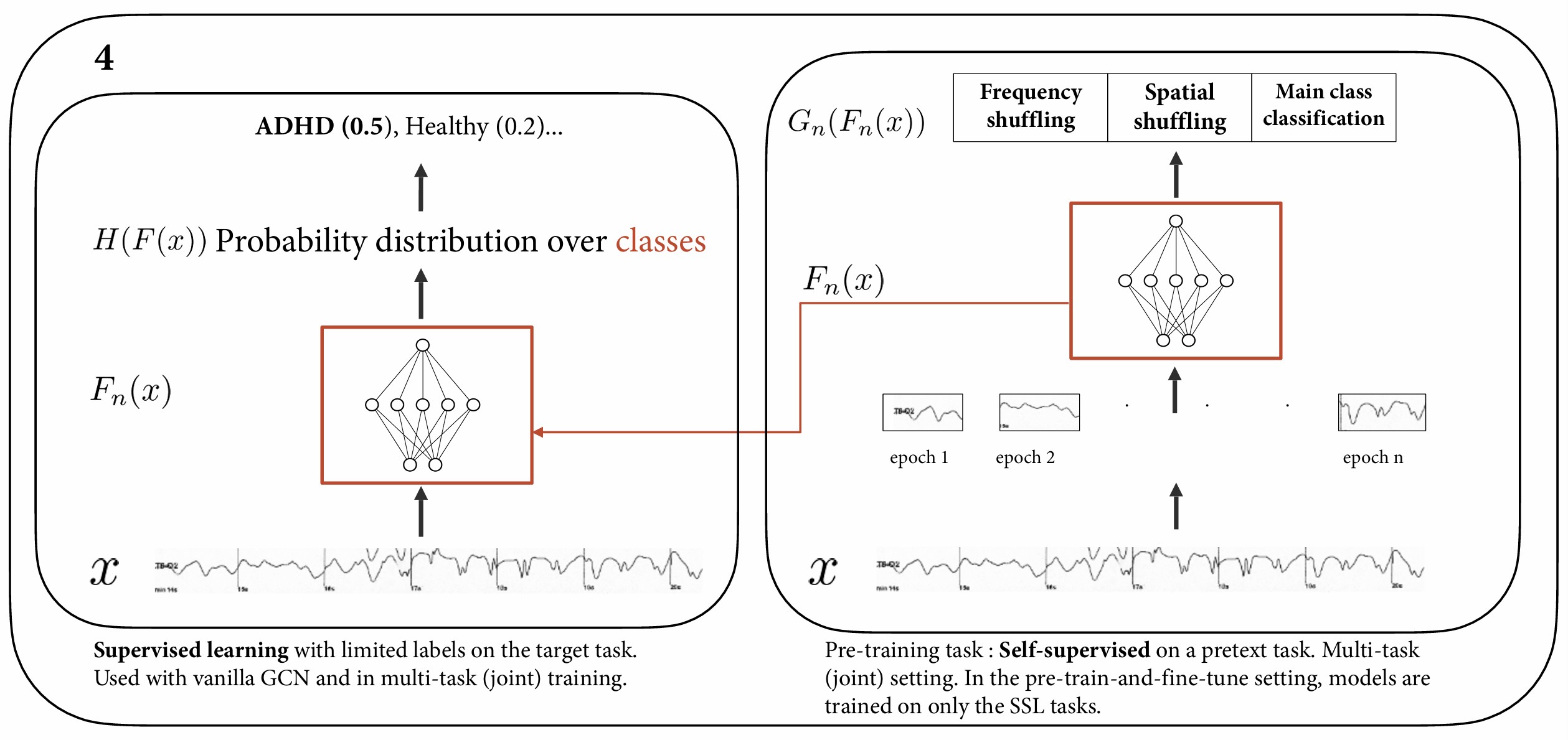

🔺 (1) Human Brain ⟷ Robot Brain (cHRI)

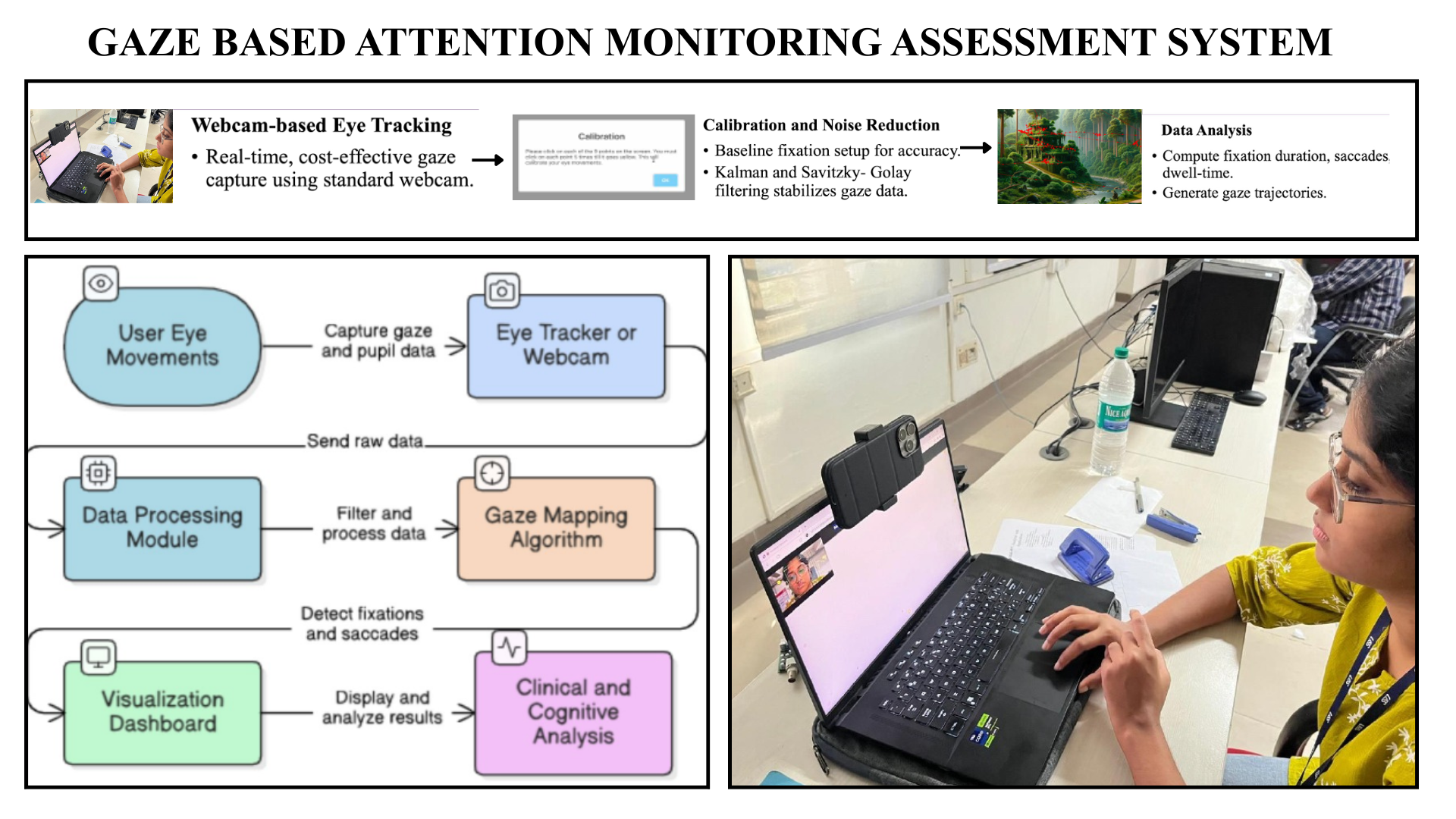

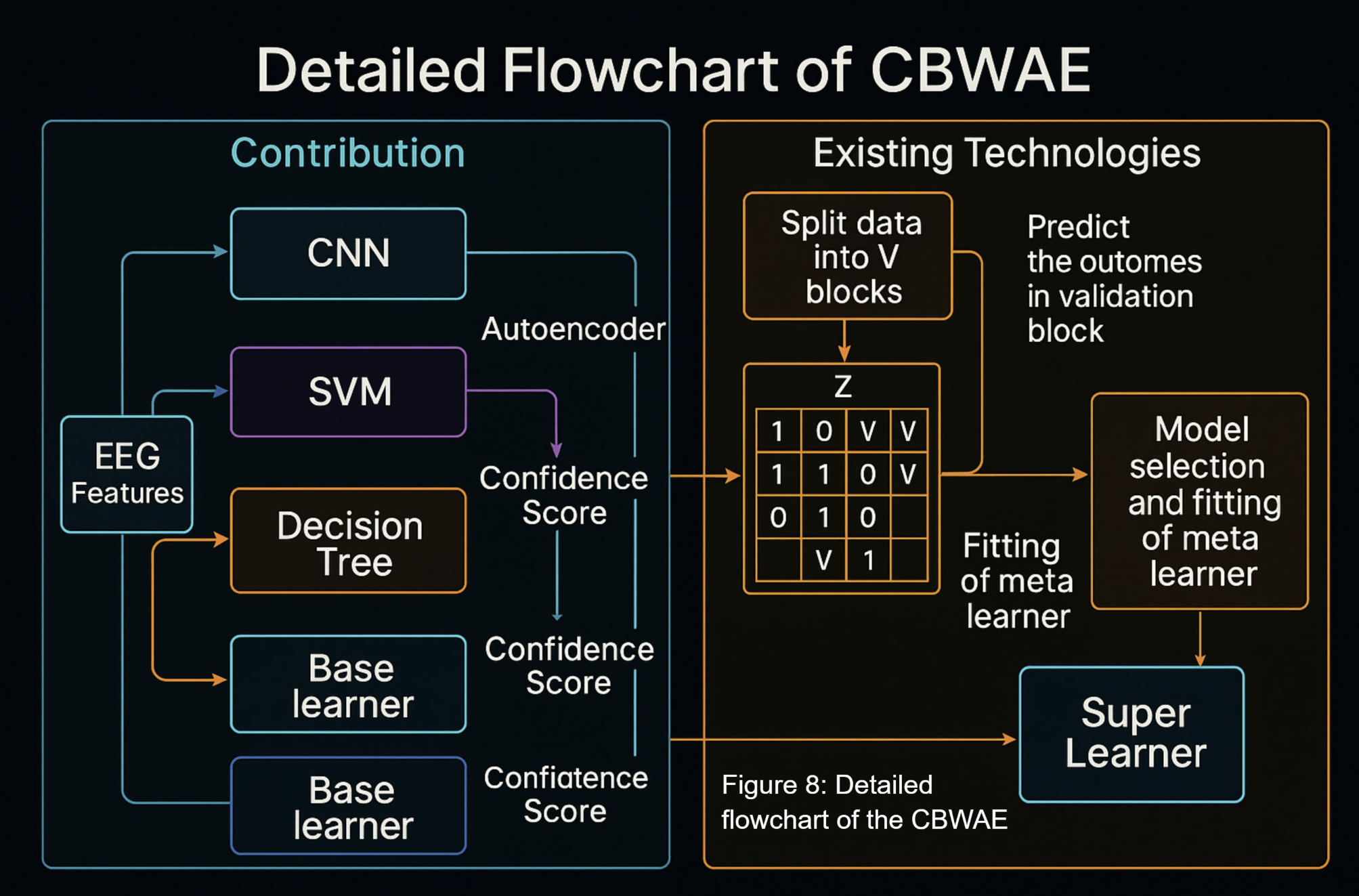

User intent and cognitive states are decoded through neural signals (e.g., EEG), attention tracking, or other neural-sensing modalities to guide robotic decision-making and adaptive control (e.g., shared autonomy). Conversely, the robot’s “brain” can communicate back to the human brain using neuromodulation or non-contact feedback cues such as visual patterns or auditory signals, enabling a direct, closed-loop interface between cognitive states and machine intelligence.

🔺 (2) Human Brain ⟷ Robot Body (cHRI)

Thought-driven interfaces (e.g., BCIs) translate cognitive states into physical action, enabling control of robotic limbs, exosuits, or tele-operated robots via imagined movement, motor imagery, or mental workload. The robot body, in turn, conveys its intention or status back to the user through expressive gestures, non-contact body movement, visual indicators, speech, or sound—supporting fluid two-way cognitive communication.

🔺 (3) Robot Brain ⟷ Human Body (cHRI)

Intelligent robotic systems adaptively shape the user's experience by delivering context-aware feedback (via haptics, visual cues, auditory signals, or neuromodulation such as brain or muscle stimulation) based on the user’s physiological or affective state. At the same time, users can interact with the robot using body language, facial expressions, voice commands, and other non-contact modalities, allowing mutual understanding without the need for physical touch.

🔺 (4) Human Body ⟷ Robot Body (pHRI)

Here, physical interaction becomes central, as muscle activity, joint movement, and biomechanical cues (sensed via EMG, IMUs, or force sensors) drive collaborative behavior. This includes co-manipulation, shared locomotion, and synchronized motion between the human body and robotic components, enabled through wearable robots, soft exosuits, cobots, and/or force-based feedback systems.

These interaction loops are made possible through multi-modal sensing and actuation, combining EEG, EMG, IMU, skin conductance, eye tracking, and more. Achieving seamless integration requires careful co-design of software, hardware, AI, and physical interfaces to ensure that robotic systems align with the user's natural cognitive and physical processes.

Ultimately, NeuroDesign invites us to imagine and build technologies that are not only usable but also embrace the richness of human cognition and embodiment. It guides us to design robots and interactive systems that understand us, respond to us, and evolve with us in ways that are emotionally meaningful, neurologically intuitive, and functionally empowering.

==================================================

📝💡 Suggested Submission Topics

We welcome submissions across a wide spectrum of human-robot interaction, including (but not limited to):

* Affective & Social Robotics

* Brain-Machine / Brain-Computer Interfaces (BCI)

* Wearable & Assistive Robots

* Exoskeletons & Rehabilitation Systems

* Human-AI Co-adaptation

* Embodied AI / Large Language Models for HRI

* VR/AR, XR, & Metaverse-based Interaction

* Haptics, Teleoperation & Sensory Feedback

* Cognitive & Physical Human-Robot Interaction (cHRI/pHRI)

* Neuroergonomics & Human Factors

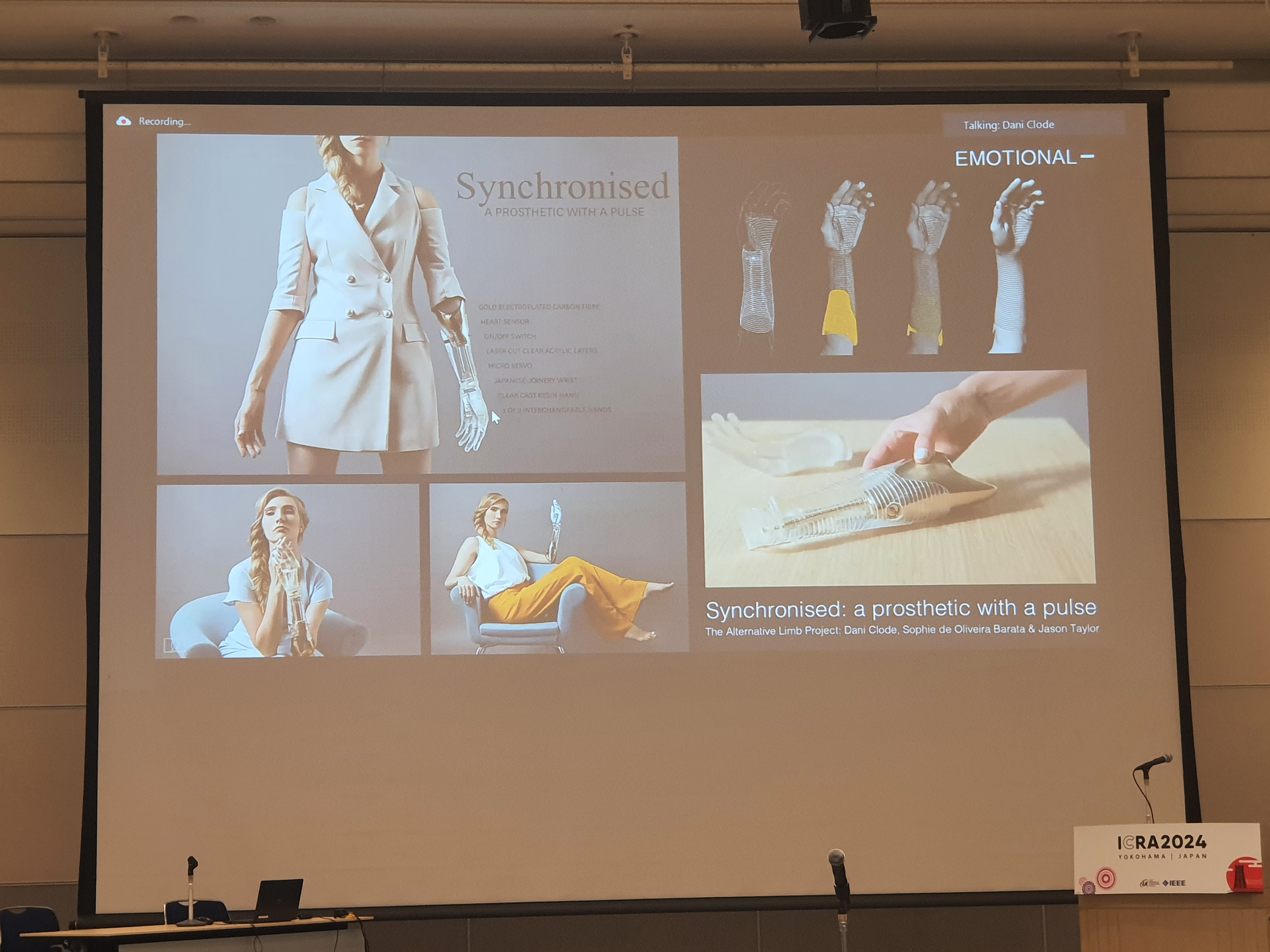

* Soft Robotics & Bionic Systems

* Smart Environments & Pervasive Computing (IoT, Smart Homes)

* Multimodal Interfaces: Gesture, Speech, Emotion Recognition

* RoboEthics, Inclusion & DEI in HRI

* RoboFashion, Adaptive Wearables & Robot Skins

* Supernumerary Limbs, Human 2.0, & Intelligence Augmentation

* Anything that Connects Minds, Bodies, and Robots

==================================================

📥 Submission

Submission is very simple! 😊 Please use the link below to express your intent to participate, so that we can reserve your spot for a 5-minute pitch presentation.

After that, you may submit any one or any combination of the submission options listed below, along with your materials.

🔺 (1) Video Abstract: A 100-word summary + 1–2 minute video overview

(A concise 100-word abstract and a 1-2 minute video, offering a brief yet engaging overview of your project.)

🔺 (2) Slide Deck: A 100-word summary + 5 informative slides

(A 100-word abstract accompanied by 5 detailed slides for a short but thorough presentation.)

🔺 (3) Extended Abstract: A 2-page write-up (IEEE RAS format)

(for a more in-depth submission)

IEEE Template: https://ras.papercept.net/conferences/support/word.php

==================================================

🎤 Finalists & Presentation Format

Our selection process is on a “rolling basis”, and we aim to choose 10 projects for the final on-stage pitch presentation. We especially encourage those who have already submitted their work as posters or papers to Neuroscience 2025 or have publications elsewhere to participate in our event. This competition offers a fantastic opportunity to increase the visibility of your research globally.

* 10 projects will be selected for on-stage 5-minute pitch presentations.

* Finalists may join in person, via Zoom, or submit a pre-recorded presentation.

* All submissions (accepted or not) will be offered poster/demo space at our venue.

* We will print and exhibit posters for virtual participants.

==================================================

🖼️💻 Exhibition and Virtual Participation

All submitted projects will receive a dedicated booth for poster and/or prototype demonstrations. For participants unable to travel to Neuroscience 2025, we will display your posters "virtually" on our website.

The event is designed to be "Hybrid" to ensure that everyone has the opportunity to participate, regardless of their ability to travel to Neuroscience (@ San Diego, CA, USA). A Zoom link will be provided for your virtual participation.

==================================================

🏆 Awards & Recognition

We are excited to present two prestigious award categories in this year’s competition. The "Best Innovation in HRI NeuroDesign Award" will recognize 3 outstanding projects demonstrating groundbreaking advances in Human-Robot Interaction NeuroDesign. The 1st Prize winner will receive €500 EUR, the 2nd Prize winner €250 EUR, and the 3rd Prize winner €125 EUR to support further prototyping.

In addition, the "Most Popular Project in HRI NeuroDesign Award" will honor 2 projects that win the hearts of our workshop audience, as determined by a popular vote. The First Prize in this category includes a full waiver for submission to Frontiers in Robotics and AI.

All remaining participants will have the chance to win gifts through a lucky draw, and every finalist will receive awards and official certificates in recognition of their achievements.

🔺 (1) 🧠 Best Innovation in HRI NeuroDesign Award

3 top projects selected by our expert panel based on originality, NeuroDesign alignment, and real-world potential.

Prizes:

🥇 1st Prize: €500 EUR

🥈 2nd Prize: €250 EUR

🏅 3rd Prize: €125 EUR

🔺 (2) ❤️ Most Popular Project in HRI NeuroDesign Award

2 projects voted for by workshop attendees and the online audience for charm, creativity, and engagement.

Prizes:

🏆 1st Prize: Full submission fee waiver to Frontiers in Robotics and AI (APC waiver)

🥈 2nd Prize: €125 EUR

🔺 (3) 🎁 Participation Gifts

The remaining participants will be entered into a post-event drawing for the following gifts:

* 1x DIY Neuroscience Kit – Pro

* 1x Raspberry Pi 5 (16GB RAM)

* 1x Raspberry Pi AI HAT+ (13 TOPS)

* 1x uMyo wearable EMG sensor

* 1x Souvenir from Eindhoven

All finalists will receive official certificates and feature opportunities on our website and post-workshop publications.

==================================================

📅 Key Dates

🔺 Submission Deadline: November 10, 2025

🔺 Competition Day & Time: November 19, 2025

@ 9:00–10:30 AM (Pacific Time) | 12:00–1:30 PM (Eastern Time) | 6:00–7:30 PM (Central European Time) | 10:30 PM–12:00 AM (Indian Standard Time)

==================================================

🔗 Show your intent of participation: https://forms.gle/TeywZB55NchRXeWn6

👉 Material submission form: https://forms.gle/fQMxJtkXb8JEU2WR6

For any questions, feel free to reach out via our website submission form, or email us at neurodesign.hri@gmail.com

Let’s shape the future of human-robot interaction—by design, for the brain.

Best regards,

The NeuroDesign in Human-Robot Interaction Student EXPO & Competition

https://www.neurodesign-hri.ws/2025
